Thomas R. Jeffrey, PhD

Abstract

Artificial intelligence (AI) is a new technology paradigm with the potential to greatly benefit humankind, but it has just as much potential to have a negative impact. Unlike older technologies focused on doing specific tasks better than humans, AI is being designed to learn and make decisions as if it were human. The ethical implications of such an objective are complex, and society’s ever-increasing dependency on AI should give rise to concern about how humans will coexist with this new technology. Despite its pervasiveness in our lives, there is very little literature on how much individuals understand about AI or how they perceive its impact on humankind. This study explores Generation Z perceptions of AI perils and of the need for ethical discussions. The results indicate a slight contradiction in attitudes surrounding AI ethics: while participants believed that discussions regarding the ethical development and use of AI were needed, many of them were not aware of such discussions. Participants were also more concerned about ethical issues in AI development over the long term than in the near term.

Introduction

Arguably, technology is humankind’s most valuable resource, one on which every segment of society has become dependent. We rely on technology to do everything from the menial and mundane to the advanced and incredible. From a historical perspective, technology has been fundamental to the progress of individuals and nations. It has transformed not only how we live but who we have become and will become (Coleman 2019, 23). The most dominant form of technology in today’s world is artificial intelligence (AI), because it has the potential to change the very trajectory and well-being of society. The desire to capture this potential has spawned the relentless development of new forms and uses of AI in a technology explosion that has been dubbed the Fourth Industrial Revolution (Schwab 2016). Though interest in AI goes back to the mid-20th century, it never found much of a foothold outside of academia (Bostrom 2017, 6). Only within the last two decades has AI development begun to overcome the challenges of economic and programmatic viability and become commonplace in our everyday lives. Examples of everyday AI range from recommendation engines to facial recognition to self-driving cars to intelligent assistants in our homes. Indeed, AI has made the transition from academic novelty to everyday necessity and has become increasingly pervasive.

The improvements that AI has brought to industries like healthcare, education, and transportation are impressive, and there is optimism that AI will be instrumental in “the advancement of vital public interests and sustainable human development” (Leslie 2019, 3). Despite such optimism, the reality is that AI has just as much of a downside as it does an upside. Unfortunately, AI development is largely driven by the ability of industry and government to commoditize it for competitive advantage, an objective that does not necessarily align with the public good (Crawford 2021, 211). Indeed, conventional wisdom seems to hold that AI is the future and that those not willing to embrace it will be left behind. To put the global importance of AI into perspective, the business consultancy PwC (2019) reports that the world’s two largest economies, the United States of America and China, are expected to use AI to grow their economies by a combined $10.7 trillion by 2030, accounting for 70% of AI’s global economic impact. Ignoring the economic and political incentives behind AI development therefore risks a dissonance between its potential for positive outcomes and its potential for negative, perhaps even catastrophic, ones.

The negative potential of AI cannot be dismissed as futuristic science fiction about super-intelligent robots gone awry. In reality, AI development is already undermining individual autonomy and fragmenting social structures (Coleman 2019, 107). Because AI has such far-reaching implications, the most salient question we need to be asking right now is how humans foresee coexisting with this technology. The paradox of AI lies in its perceived neutrality. Considering its reach and potential, the idea that AI is merely a tool, useful only in the way its creator develops and implements it, is not a comforting proposition. The pace and scope of AI development should be cause for concern and give rise to a wide range of practical and philosophical questions. The overarching question may not be how AI will impact future generations, but rather how it is already impacting individuals and society. Generation Z (Gen Z) is the first cohort to be fully immersed in a techno-centric world from birth (Dimock 2019). Gen Z experiences AI as part of everyday life and will see its influence grow even further. This study seeks to explore how Gen Z perceives AI and its level of concern about the need for ethical direction in AI research and development.

Literature Review

Despite AI being a household term, one might legitimately ask one’s own AI agent, “What is AI?” One answer provided by AI about AI is “… the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings” (Copeland 2021, para. 1). That answer seems straightforward. Now, ask the AI, “What does AI do?” That answer gets complicated, and therein lies the challenge for the average person. Because AI crosses so many disciplines, defining what it is and does can soon get muddled with subjectivity ranging from the philosophical to the conceptual to the technical. It is easy to see why the average person may not really understand AI, nor want to get bogged down in jargon-filled definitions that turn on nuanced, discrete differences. While these distinctions are important at the academic and technical levels, they only add to the confusion and do little to establish common ground for general discussion. However, we need not understand how something works to consider its usefulness, nor to judge its benefits and risks. Even though we do not fully understand the human condition, we still strive to create parameters for an equal and just society.

As a technological innovation, AI is different because it is not just a technology upgrade; it is the replacement of one technology with a completely different species of technology (Pfaff 2019, 129). Artificial intelligence is disruptive in ways that challenge our individual and social norms, both positively and negatively (Haggstrom 2016, 38). For this very reason its efficacy needs to be more widely and openly discussed, especially since the negative potential of AI has already surfaced. If the negative effects of AI are hard to deal with in the present, dealing with them in the future will only get more difficult. The challenge grows exponentially, especially because the gap between what AI can do now and what it will be able to do in the future is hard to gauge. The challenge is not to underestimate its potential by limiting our thinking to an anthropomorphized view of technology, one that measures intelligence on a scale relative only to human understanding (Bostrom 2017). Human intelligence may be a mere fraction of what a super-intelligent AI might come to possess. What if an AI is developed whose intelligence far surpasses that of even the most intelligent human? By limiting our evaluation of AI to the capacity of human intelligence, we may be underestimating AI and its threat to humankind, especially considering how much of our lives relies upon technology.

Our dependence on technology alone should be a cause of concern about the development of AI. Technology dependence has been gradual but all-encompassing for society: “like the frog in a pot, we have been desensitized to the changes wrought by the rapid increase and proliferation of information technology” (Markoff 2016, 23). The metaphor seems apt given how much of our lives has come to rely on the ever-increasing use of AI-enhanced products and services. It is difficult to find any industry, organization, or nation that is not seeking to leverage AI for its potential benefits. Even those potential benefits are troublesome, because a benefit to one party may be a detriment to another. For example, consider the replacement of human workers with machines: the promise that the technology will eventually create better jobs for those displaced is not a sure thing and is no consolation for those losing their livelihoods.

That AI will replace humans in the workforce is something we are already dealing with. Across all sectors, both labor-intensive and skill-based tasks are being assigned to machines. AI is poised to replace humans in the workforce on many levels, such as (a) automation of physical labor, (b) customer interactions, and (c) decision making (Agrawal, Gans, and Goldfarb 2019, 32). While many of us are aware that AI is used in manufacturing, it is less well known that AI is increasingly part of the decision-making process in many industries. In their study of the impact of AI, Agrawal et al. (2019) highlight advances made in AI across a number of industries and focus more narrowly on a study of radiology that showed many of the tasks and skills necessary in the field could be done with AI (41-43). At risk is not just the loss of jobs but the continued rise of socio-economic disparity (Dubhashi and Lappin 2017). Technological advancement tends to benefit the wealthy, because those with capital to invest are best positioned to take advantage of opportunity. The result of innovations such as AI is economic growth without the creation of new jobs, thus widening an already breathtaking wealth and power gap (Coleman 2019, 153).

We should be concerned that AI will amplify inequality and bias. At issue is that a machine may have bias embedded into its programming (intentionally or unintentionally), or it could learn bias from the data it consumes. One example of data-created bias is Microsoft’s Tay chatbot, which had to be taken offline when it began using insensitive and objectionable language learned from online conversations (Schwartz 2019, para. 1). On the other hand, bias can be programmed directly into an AI system. Technology reporters Safiya Noble and Sarah Roberts (2016, para. 1) provide an example of intentional bias in a report criticizing Facebook’s practice of enabling advertisers to exclude audiences from housing and employment ads based on race; interestingly, Facebook does not ask for race or ethnicity and instead uses proprietary algorithms and data gleaned from user posts to make such decisions. It should be noted that market segmentation is already a contentious marketing activity because it categorizes individuals based on behavioral, demographic, economic, and psychographic data, a process that many consider stereotyping (Frith and Mueller 2010, 110). While AI systems enable marketers to gain an extraordinarily rich understanding of what motivates consumer behavior (Urban et al. 2020, 74), these same systems carry the very real risk of both intentional and unintentional bias (Tong, Luo, and Xu 2020, 75). This is unsettling for a number of reasons, not least of which is that accountability becomes difficult to assign because these AI systems are developed under the guise of proprietary systems of personalization and customization (Turow 2017, 247). These closed, proprietary systems make it very difficult for anyone to know, much less prove, that bias, disinformation, or even discrimination has occurred as a result of the AI (Katyal 2019).

The loss of individual autonomy and decision-making should also be a concern about AI. In particular, ceding decisions to machines leads to the loss and degeneration of cognitive function (Danaher 2018, 634). This is especially problematic as individuals become more dependent on direction from technology and no longer take responsibility for making choices (Leslie 2019, 5). Technology that allows individuals to share their preferences has become technology that dictates their preferences for them. In effect, a whole industry has arisen that constantly surveils our every move with the sole purpose of influencing us without our realizing it (Zuboff 2019, 178). It is not just that AI has the potential to manipulate humans through disinformation or limited information; AI also has the potential to divide society and strengthen the structures of power and industry that already hold large amounts of power (Padios 2017, 220). Artificial intelligence does not level the playing field; instead, it is designed to “amplify and reproduce the forms of power it has been deployed to optimize” (Crawford 2021, 224).

We should also be concerned about the military uses of AI. It is no secret that US military and intelligence agencies have developed AI for uses ranging from surveillance to autonomous vehicles to logistics. In fact, the interest is so great that the Defense Advanced Research Projects Agency (DARPA) holds AI and robotics competitions to draw interest in development and research from the public (Markoff 2016, 24). Spending on DARPA projects alone is slated to increase 500% ($249M) in the coming years due to fears of falling behind China in next-generation technologies (Heaven 2020). Military strategist Anthony Pfaff (2019, 141) concludes that the development of autonomous weapons is inevitable and that, if done properly, it could act as a deterrent, but if done improperly, it could result in increased militarization, war atrocities, and decreased accountability.

As society becomes more reliant upon AI, it is critical that its development be controlled and regulated, with the guiding principle being what is best for all of humankind. Technology journalist John Markoff (2016, 325) warns that the issue AI raises is whether to design humans into or out of the process, at which point the question must be asked, “Who gets to make that decision?” Philosopher Nick Bostrom (2017, 256) suggests that the question is not only about “who” gets to make decisions about AI, but also “why.” This brings us back to the paradox of AI neutrality, because the degree to which AI’s benefits are transferred to society in general depends upon the designers and owners of the AI (Markoff 2016, 327). The other aspect of control in AI design and development concerns unknown risks and what they could mean for humanity. Computer engineering professor and author Utku Kose (2018) posits that it is time to consider the existential ethics of developing machines that could learn something negative and perpetuate it throughout the system, or develop negative behavior that puts human lives at stake, especially if the risk is global and interminable. It is no comfort, then, that “As with any new and rapidly evolving technology, a steep learning curve means that mistakes and miscalculation will be made and that both unanticipated and harmful impacts will inevitably occur” (Leslie 2019, 3). We have passed the point of no return, but not the point at which more thoughtful strategies for moving forward can be adopted.

Purpose of the Study

The literature indicates concern about how AI will impact society, yet little is known about individuals’ awareness or perceptions of AI, especially those of Generation Z, which has never known a life without it. Gen Z is the first generation to be immersed in technology from birth and may not fully understand the impact of the trade-off that society has made for them with that technology. Previous studies of Gen Z perceptions of AI found that: a) participants have a positive view of AI but are indifferent, confused, and poorly informed about it (Gherhes and Obrad 2018, 14), b) despite a positive view of AI, there was anxiety due to the inevitability of AI advances and the uncertainty of how AI will impact the individual and society (Jeffrey 2020, 12), and c) when it comes to marketing, there is a low level of concern that AI is used in marketing but high levels of concern about data privacy, profiling, stereotyping, and manipulation (Jeffrey 2021, para. 42). Because AI’s potential for both positive and negative outcomes is so high, it is important to gain better insight into how Gen Zers perceive its current and future challenges. The purpose of this study is to investigate Gen Z perceptions of: 1) the perils and threats of AI, and 2) the need for business and political discussion regarding ethical concerns about AI development. The results of this study will contribute to a better understanding of how Gen Zers perceive AI and the need for ethical discussions about its development. The significance of this study is that it will provide further evidence of the perceived impact of AI on our lives and may spawn further research into how humans will coexist with technology.

Study Design

This study is a continuation of previous studies of Gen Z perceptions of AI (Jeffrey 2020; Jeffrey 2021). It is a non-experimental study designed to use descriptive statistics to investigate a phenomenon and correlational statistics to identify whether any relationships exist between study variables that warrant more research (McMillan 2004). The study used a convenience sampling methodology. Respondents were undergraduate students enrolled at a medium-sized, private, four-year liberal arts institution located in the midwestern United States.

The research instrument consisted of unique items developed by the researcher and was administered in March 2019. It comprised four demographic questions, six closed-ended questions, and a single open-ended question. Bivariate correlation was used to analyze the data from the closed-ended questions. This method is useful and appropriate for identifying and describing relationships among variables in a non-experimental design. Bivariate correlation measures the direction and relative strength of the relationship between variables using the Pearson product-moment correlation coefficient (Pearson’s r) (Howell 2007; McMillan 2004; Pedhazur and Schmelkin 1991). The study design does not measure between-group differences, nor does it employ any qualitative analysis of the open-ended question, although some responses may warrant being used as clarifying data.
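Pearson’s r is the sum of the cross-products of each variable’s deviations from its mean, divided by the square root of the product of the two sums of squared deviations: r = Σ(x − x̄)(y − ȳ) / √(Σ(x − x̄)² · Σ(y − ȳ)²). It ranges from −1 to +1, with the sign giving the direction of a linear relationship and the magnitude its relative strength. The minimal Python sketch below computes it using only the standard library; the function name and the five paired ratings are hypothetical illustrations, not the study’s data or the software used in its analysis.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    if len(x) != len(y):
        raise ValueError("x and y must contain the same number of observations")
    n = len(x)
    if n < 2:
        raise ValueError("at least two paired observations are required")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Deviations of each observation from its variable's mean.
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    # Sum of cross-products (numerator) and sums of squares (denominator);
    # the 1/(n - 1) factors of covariance and variance cancel out.
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when either variable has zero variance")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical ratings for five respondents (illustrative only, not study data).
    awareness = [1, 2, 3, 4, 5]
    belief = [2, 1, 4, 3, 5]
    print(round(pearson_r(awareness, belief), 3))  # 0.8
```

Python 3.10 and later also provide `statistics.correlation`, which returns the same coefficient; the explicit version simply shows each step of the calculation.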

Study Results

A total of 147 respondents completed all questions; only complete responses are used for reporting. Demographic results show that 25% of participants reported being 21 years old (n=37), followed by 20-year-olds (22.4%, n=33) and 19-year-olds (21.8%, n=32). For gender, 58.5% (n=86) reported as female and 41.5% (n=61) as male. For ethnicity, over three-quarters of respondents (76.9%, n=113) reported as White. The college rank reported by the most participants was Junior (28.6%, n=42), followed by Senior (27.2%, n=40). For college major, Business and Technology was reported by the largest number of participants (27.2%, n=40), followed by Social Sciences, History, and Theology (15.6%, n=23) and Nursing and Healthcare (15.0%, n=22). The demographic characteristics of all participants are shown in the Appendix.

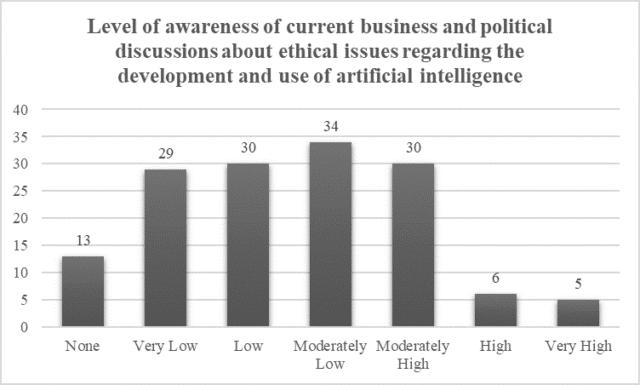

When asked about their level of awareness of current business and political discussion about ethical issues regarding the development and use of AI, the largest number of participants (n=34) reported a low level of awareness (M=3.52, SD=1.5). Almost half (49.0%) of participants reported a level of awareness in the low-to-none range. See Figure 1 for the results of participant responses to this question.

Figure 1.

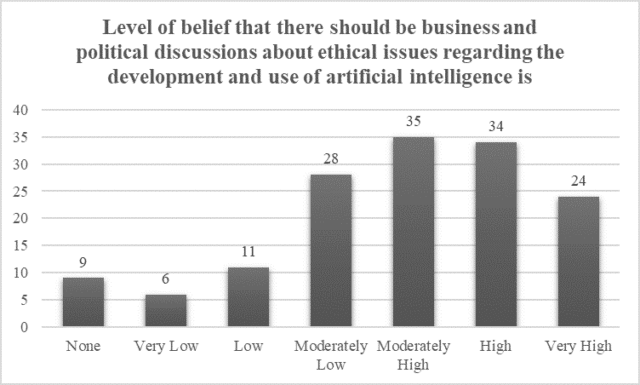

When asked about their level of belief that there should be business and political discussion about ethical issues regarding the development and use of AI, participants’ mean score fell into the moderately high range (M=4.85, SD=1.648). For this question, nearly two-thirds (63.2%, n=93) reported a moderately high to very high level of belief that there should be discussions of ethical issues regarding AI development. Figure 2 shows the results for this question.

Figure 2.

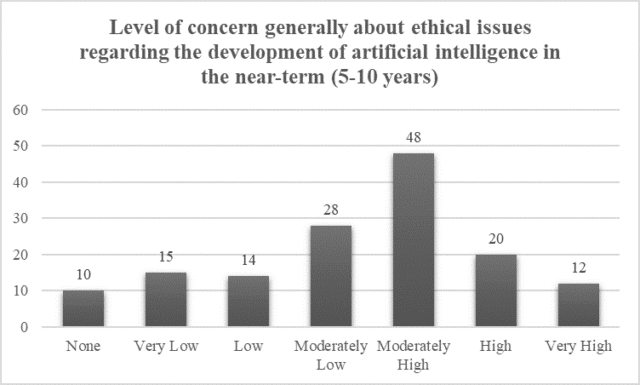

When asked about their level of concern about ethical issues regarding the development of artificial intelligence in the near term (5-10 years), participants’ mean score fell into the moderately low range (M=4.34, SD=1.620). The largest number of participants reported a moderately high level of concern (32.7%, n=48). Figure 3 shows the results for this question.

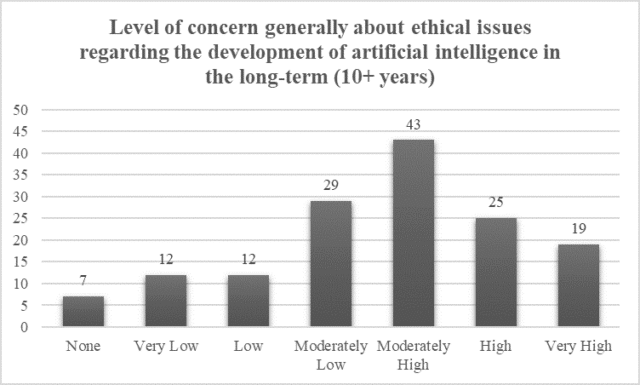

When asked about their level of concern about ethical issues regarding the development of artificial intelligence in the long term (10+ years), participants’ mean score fell into the moderately high range (M=4.63, SD=1.609). For this question, the largest share of responses (29.3%, n=43) was in the moderately high range, and more than half (59.2%, n=87) fell in the moderately high to very high range of concern. Figure 4 shows the results for this question.

Figure 3.

Figure 4.

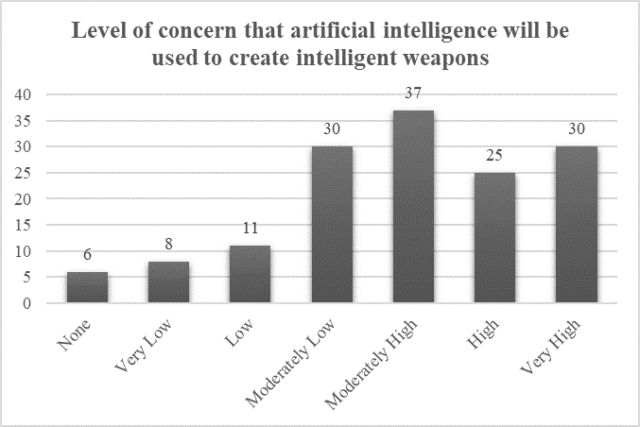

When asked about their level of concern that artificial intelligence will be used to create intelligent weapons, participants’ mean score fell into the moderately high range (M=4.90, SD=1.609). While 37.4% (n=55) of participants reported a moderately low to no level of concern, 62.6% (n=92) reported a moderately high to very high level of concern. The results for all participants for this question are shown in Figure 5.

Figure 5.

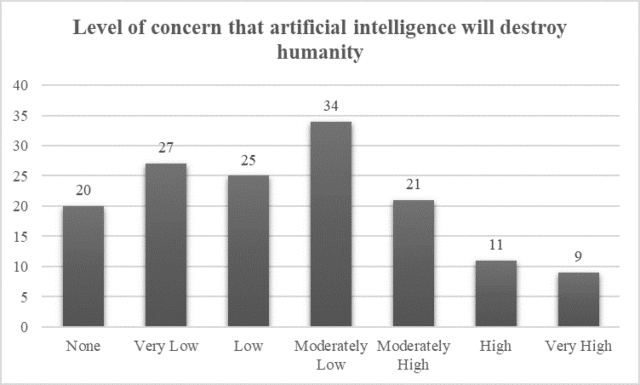

Figure 6.

Bivariate correlation was used to identify any relationships among the six AI variables. The results are shown in Table 1 as a correlation matrix of Pearson’s r for all six variables. The analysis found that every significant correlation was positive and that the strongest correlations were between: a) concern about ethical issues in the near term (5-10 years) and concern about ethical issues in the long term (10+ years), r(147) = .766, p<.001; b) concern about ethical issues in the near term (5-10 years) and belief that there should be business and political discussions about ethical issues regarding the development and use of AI, r(147) = .653, p<.001; c) concern about ethical issues in the long term (10+ years) and belief that there should be business and political discussions about ethical issues regarding the development and use of AI, r(147) = .632, p<.001; and d) concern that AI will be used to create intelligent weapons and concern that artificial intelligence will destroy humanity, r(147) = .507, p<.001. These findings indicate that participants who are concerned about the ethical development and use of AI, be it short-term or long-term, are also more likely to believe that there should be business and political discussions about ethical issues regarding AI. It also appears that participants who are concerned about the development of AI weapons are also more likely to be concerned that AI will destroy humanity.

Table 1. Correlation Matrix for Level Variables

| Variable | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Awareness of discussions about AI | – | | | | | |
| 2. Belief there should be AI discussions | .458** | – | | | | |
| 3. Concern about AI near-term | .372** | .653** | – | | | |
| 4. Concern about AI long-term | .406** | .632** | .766** | – | | |
| 5. Concern about AI intelligent weapons | .118 | .322** | .464** | .447* | – | |
| 6. Concern that AI will destroy humanity | .158 | .075 | .303** | .308* | .507** | .466** |

Note. **p < .001 (2-tailed); *p < .05 (2-tailed).

Conclusions and Discussion

The purpose of this study was to investigate Gen Z perceptions of: 1) the perils and threats of AI, and 2) the need for business and political discussion regarding ethical concerns about AI development. Results from this study indicate that while most participants (72.0%, n=106) had little awareness of current business and political discussions about ethical issues regarding the development and use of artificial intelligence, nearly two-thirds (63.2%, n=93) believe that these ethical discussions should take place. Participants were more concerned about ethical issues regarding the development of AI in the long term (mean score of 4.63, moderately high range) than in the near term (mean score of 4.34, moderately low range). The bivariate correlation analysis found a strong, positive correlation between the belief that there need to be business and political discussions about ethical issues regarding AI and both near-term ethical concerns about AI (r(147) = .653, p<.001) and long-term ethical concerns about AI (r(147) = .632, p<.001). This finding indicates that those with ethical concerns about AI, be it in the long term or near term, were also more likely to believe that discussions about AI ethics were needed. In terms of perils to humanity, the study found that participants were more concerned about the development of AI weapons than about AI destroying humanity, with 37.4% (n=55) and 13.5% (n=20), respectively, reporting high or very high concern. The conclusion is that participants are concerned about ethical issues regarding the development and use of AI, in particular the weaponization of AI, and believe that there is a need for business and political discussions on these topics, especially regarding the long term.

Technological progress is often viewed as inevitable, something humans must adapt to rather than something that adapts to our needs, and for many this can feel overwhelming. It is time to discuss AI development in terms of machine ethics and safety as a means of lessening anxiety in the discipline and in society (Kose 2018). The drive to push limits and discover new horizons has benefited humanity, but that does not mean we should ignore the warning signs. Author Tad Friend (2018, 45) asks, “Precisely how and when will our curiosity kill us?” Some may see this question as an overreaction, given that we are in the early stages of the AI revolution. However, many voices are prompting industry and governments to come to terms with the ethical and safety aspects of AI sooner rather than later, particularly the possibility that machines could learn something wrong or develop negative behavior that poses a terminal threat to humankind or the environment. Markoff (2015, 339) posits that the defining question of the current era is, “What will the relationship be between humanity, AI, and robots?” The answer to this question, and to all those related to the direction of AI development, should be based upon “human-centered design” (343).

Technology, generally speaking, is viewed as necessary, beneficial, and inevitable. Seldom do we consider what we are giving up in return for technology’s benefits. However, control and regulation are of the essence with AI because it has become too big to unplug. In considering the future of intelligent machines, Markoff (2015) asks whether we will control technology or it will control us. “When a revolution happens, the consequences are not obvious straight away, and to date, there is no uniformly adapted framework to guide AI research to ensure a sustainable societal transition” (Kusters et al. 2020, 1). Since there is no international governing body to provide guidance on the control and regulation of AI, there is no way to discern whether AI development is aligned with human and societal goals. In fact, humanity is mostly relying on the benevolence of the people and institutions that own and develop AI to consider the ethics of their creations (Markoff 2016, 333).

There are existing examples of how to control and regulate unprecedented technologies, such as nuclear technology and biotechnology. One place to start is creating a common vocabulary that represents both the ethical and practical aspects of AI to allow for appropriate discourse. Leslie (2019, 9) suggests that bioethics is a good starting point but recommends that AI ethics go beyond the good of the individual, looking through the lens of human rights and, indeed, determining what is best for the whole of society. To do this, a much broader range of disciplines and organizations must be included in the conversation, not only to reveal the magnitude of the AI ethical dilemma but also to help solve it. Researchers Kusters et al. (2020, 5) conclude that AI research and development needs to be interdisciplinary because of the complexity and dynamics of the ecosystem in which it is evolving and emerging, all of which necessitate outcomes that are transparent, explainable, and accountable. In particular, AI needs to move beyond the science of computer engineering and embrace the standards of the wider scientific world. Indeed, AI research and development need to employ the very basis of the scientific method, which addresses bias and ethical standards (Kusters et al. 2020, 4).

We live in a world struggling to deal with past eras of unbridled technological advances that have impacted, and are still impacting, humankind on every level. Just consider our inability to move beyond our reliance on a few major technological advances, such as fossil fuels and plastics. While these technologies have served humanity well, the full extent of their impact on humans and the environment is breathtaking. The potential benefits and detriments of AI to all of society are just as powerful, perhaps more so, and because of this there is a moral imperative to address and control AI before we have an insurmountable, if not fatal, problem to fix. Let the past serve to inform the future. As fossil fuels and plastics became increasingly embedded in our lives, we ignored, and in some cases denied, their cumulative effect on our planet. Now, they pose a seemingly insurmountable threat to nature and humankind. A cautionary tale, indeed, and one that reinforces the need for an appropriate response to a future that is certainly uncertain; thus, as Haggstrom’s (2016) metaphor warns, “Here be dragons!”

References

Agrawal, Ajay, Joshua S. Gans, and Avi Goldfarb. 2019. “Artificial Intelligence: The Ambiguous Labor Market Impact of Automating Prediction.” Journal of Economic Perspectives 33 (2): 31-50.

Bostrom, Nick. 2017. Superintelligence: Paths, Dangers, Strategies. New York: Oxford University Press.

Coleman, Flynn. 2019. A Human Algorithm: How Artificial Intelligence Is Redefining Who We Are. Berkeley: Counterpoint.

Copeland, B. J. 2021. “Artificial Intelligence.” Encyclopedia Britannica, December 14. https://www.britannica.com/technology/artificial-intelligence.

Crawford, Kate. 2021. Atlas of AI. New Haven: Yale University Press.

Danaher, John. 2018. “Toward an Ethics of AI Assistants: An Initial Framework.” Philosophy & Technology 31 (4): 629-653. doi:10.1007/s13347-018-0317-3.

Dimock, Michael. 2019. Defining Generations: Where Millennials End and Generation Z Begins. Pew Research Center. https://www.pewresearch.org/facttank/2019/01/17/where-millennials-end-and-generation-z-begins/.

Dubhashi, Devdatt, and Shalom Lappin. 2017. “AI Dangers: Imagined and Real.” Communications of the ACM, February: 43-45. https://cacm.acm.org/magazines/2017/2/212437-ai-dangers/fulltext.

Friend, Tad. 2018. “Superior Intelligence.” New Yorker, 44-51.

Frith, K. T., and B. Mueller. 2010. Advertising and Societies: Global Issues. New York: Lang.

Gherhes, V., and C. Obrad. 2018. "Technical and Humanities Students' Perspectives on the Development and Sustainability of Artificial Intelligence (AI)." Sustainability 10: 1-16.

Haggstrom, Olle. 2016. Here Be Dragons: Science, Technology and the Future of Humanity. Oxford: Oxford University Press.

Heaven, Douglas. 2020. "The White House Wants to Spend Hundreds of Millions More on AI Research." MIT Technology Review. February 11. https://www.technologyreview.com/2020/02/11/844891/the-white-house-will-spend-hundreds-of-millions-more-on-ai-research/.

Howell, David C. 2007. Statistical Methods for Psychology. 6th ed. Belmont: Thomson Wadsworth.

Jeffrey, Thomas R. 2020. “Understanding College Student Perceptions of Artificial Intelligence.” Journal on Systemics, Cybernetics, and Informatics, 8-13. http://www.iiisci.org/journal/sci/issue.asp?is=ISS2002.

Jeffrey, Thomas R. 2021. “Understanding Generation Z Perceptions of Artificial Intelligence in Marketing and Advertising.” Advertising and Society Quarterly (Project Muse) 22 (4). doi:10.1353/asr.2021.0052.

Katyal, Sonia K. 2019. "Artificial Intelligence, Advertising, and Disinformation." Advertising & Society Quarterly 20 (4). doi:10.1353/asr.2019.0026.

Kose, Utku. 2018. “Are We Safe Enough in the Future of Artificial Intelligence? A Discussion on Machine Ethics and Artificial Intelligence Safety.” Semantic Scholar. May 18. https://www.semanticscholar.org/paper/Are-We-Safe-Enough-in-the-Future-of-Artificial-A-on-Kose/e42d3a980ab5ea47645b381b12a518d4439ea9b7.

Kusters, Remy, Dusan Misevic, Hugues Berry, Antoine Cully, Yann Le Cunff, Loic Dandoy, Natalia Díaz-Rodríguez, et al. 2020. "Interdisciplinary Research in Artificial Intelligence: Challenges and Opportunities." Frontiers in Big Data 3. doi:10.3389/fdata.2020.577974.

Leslie, David. 2019. Understanding Artificial Intelligence Ethics and Safety: A Guide for the Responsible Design and Implementation of AI Systems in the Public Sector. The Alan Turing Institute. doi:10.5281/zenodo.3240529.

Markoff, John. 2016. Machines of Loving Grace. New York, NY: HarperCollins.

McMillan, James H. 2004. Educational Research: Fundamentals for the Consumer. Boston: Pearson Education.

Noble, Safiya U., and Sarah T. Roberts. 2016. “Targeting race in ads is nothing new, but the stakes are high.” USA Today, November 12. https://www.usatoday.com/story/tech/columnist/2016/11/12/targeting-race-ads-nothing-new-but-stakes-high/93638386/.

Padios, Jan M. 2017. "Mining the Mind: Emotional Extraction, Productivity, and Predictability in the Twenty-First Century." Cultural Studies 31 (2): 205-231. doi:10.1080/09502386.2017.1303426.

Pedhazur, Elazar J., and Liora Pedhazur Schmelkin. 1991. Measurement, Design, and Analysis: An Integrated Approach. Hillsdale: Lawrence Erlbaum Associates, Inc.

Pfaff, Anthony. 2019. "The Ethics of Acquiring Disruptive Technologies: Artificial Intelligence, Autonomous Weapons, and Decision Support Systems." PRISM, 128-145.

Rao, Anand, John Simmons, and Pawan Kumar. 2019. Global Artificial Intelligence Study: Exploiting the AI Revolution. PwC. https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html.

Schwab, Klaus. 2016. The Fourth Industrial Revolution. World Economic Forum.

Schwartz, Oscar. 2019. "In 2016, Microsoft's Racist Chatbot Revealed the Dangers of Online Conversation." IEEE Spectrum. November 25. Accessed December 18, 2019. https://spectrum.ieee.org/tech-talk/artificial-intelligence/machine-learning/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation.

Tong, Siliang, Xueming Luo, and Bo Xu. 2020. "Personalized Mobile Marketing Strategies." Journal of the Academy of Marketing Science 48 (1): 64-78. doi:10.1007/s11747-019-00693-3.

Turow, Joseph. 2017. The Aisles Have Eyes: How Retailers Track Your Shopping, Strip Your Privacy, and Define Your Power. New Haven: Yale University Press.

Urban, Glen, Artem Timoshenko, Paramveer Dhillon, and John R. Hauser. 2020. “Is Deep Learning a Game Changer for Marketing Analytics?” MIT Sloan Management Review 61 (2): 71-76. https://sloanreview.mit.edu/article/is-deep-learning-a-game-changer-for-marketing-analytics/.

Zuboff, Shoshana. 2019. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: PublicAffairs.

Appendix

Frequency of Participant Demographic Characteristics

| Characteristic | Category | n | Percent of Total |
|---|---|---|---|
| Gender | Female | 86 | 58.5% |
| | Male | 61 | 41.5% |
| Age | 18 years of age or less | 21 | 14.3% |
| | 19 years of age | 32 | 21.8% |
| | 20 years of age | 33 | 22.4% |
| | 21 years of age | 37 | 25.2% |
| | 22 years of age | 24 | 16.3% |
| Ethnicity | American Indian/Native Alaskan or Hawaiian | 4 | 2.7% |
| | Asian | 14 | 9.5% |
| | Black/African American | 8 | 5.4% |
| | Hispanic or Latino | 8 | 5.4% |
| | White | 113 | 76.9% |
| College Rank | Freshman | 35 | 23.8% |
| | Sophomore | 30 | 20.4% |
| | Junior | 42 | 28.6% |
| | Senior | 40 | 27.2% |
| College Major | Arts and Humanities | 40 | 27.2% |
| | Business and Technology | 21 | 14.3% |
| | Education | 16 | 10.9% |
| | Mathematics and Natural Sciences | 6 | 4.1% |
| | Nursing, Healthcare, and Social Work | 22 | 15.0% |
| | Social Sciences, History, and Theology | 23 | 15.6% |
| | Other | 19 | 12.9% |